At RockinDev, we adopted llama.cpp as our AI inference framework of choice. Thanks to llama.cpp supporting NVIDIA’s CUDA and cuBLAS libraries, we can take advantage of GPU-accelerated compute instances to deploy AI workflows to the cloud, considerably speeding up model inference.

Let’s get to it! 🥳

Getting started

For this tutorial we’ll assume you already have a Linux installation ready to go with working NVIDIA drivers and a container runtime installed (we’ll use Podman but Docker should work pretty similarly).

Installing NVIDIA container toolkit

The first step in building GPU-enabled containers on Linux is installing NVIDIA’s container toolkit (CTK). To set it up check out NVIDIA’s official docs and look for instructions for your specific Linux distribution. We’ll be installing CTK on Fedora Linux.

Fedora setup

First, add the NVIDIA RPM package repository:

$ curl -s -L https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo | \

sudo tee /etc/yum.repos.d/nvidia-container-toolkit.repoNow we can install CTK by running:

$ sudo dnf install -y nvidia-container-toolkitCheck that CTK was installed correctly:

$ nvidia-ctk --version

NVIDIA Container Toolkit CLI version 1.13.5

commit: 6b8589dcb4dead72ab64f14a5912886e6165c079

Next, we need to configure Linux and Podman to access your GPU resources. In order to “bridge” GPU resources from your host to the Podman container runtime, we need to generate a container device interface (CDI for short), which is a way for containers to access your GPU device:

$ sudo nvidia-ctk cdi generate --output=/etc/cdi/nvidia.yamlThis will create the corresponding CDI configuration for your GPU.

Troubleshooting: Keep in mind that whenever you update your NVIDIA drivers, you’ll have to regenerate your CDI config. If you ever get an error running containers with GPU access similar to the error below then you probably need to regenerate your CDI configuration:

Error: crun: cannot stat /lib64/libEGL_nvidia.so.550.54.14: No such file or directory: OCI runtime attempted to invoke a command that was not found.

Voilà! You should now be able to run GPU-enabled containers locally.

Building llama.cpp

Clone the llama.cpp code repo locally and cd into it:

$ git clone https://github.com/ggerganov/llama.cpp.git

$ cd llama.cppllama.cpp already comes with Dockerfiles ready to build llama.cpp images. But first, we’ll make a couple of tweaks to make sure were running on the latest CUDA version.

Edit the file .devops/server-cuda.Dockerfile and make the following changes:

ARG UBUNTU_VERSION=22.04

# This needs to generally match the container host's environment.

ARG CUDA_VERSION=12.4.1 # <-- update to latest CUDA version

# Target the CUDA build image

ARG BASE_CUDA_DEV_CONTAINER=nvidia/cuda:${CUDA_VERSION}-devel-ubuntu${UBUNTU_VERSION}

# Target the CUDA runtime image

ARG BASE_CUDA_RUN_CONTAINER=nvidia/cuda:${CUDA_VERSION}-runtime-ubuntu${UBUNTU_VERSION}

FROM ${BASE_CUDA_DEV_CONTAINER} as build

# Unless otherwise specified, we make a fat build.

ARG CUDA_DOCKER_ARCH=all

RUN apt-get update && \

apt-get install -y build-essential git libcurl4-openssl-dev

WORKDIR /app

COPY . .

# Set nvcc architecture

ENV CUDA_DOCKER_ARCH=${CUDA_DOCKER_ARCH}

# Enable CUDA

ENV LLAMA_CUDA=1

# Enable cURL

ENV LLAMA_CURL=1

RUN make server # <-- just build the server target

FROM ${BASE_CUDA_RUN_CONTAINER} as runtime

RUN apt-get update && \

apt-get install -y libcurl4-openssl-dev

COPY --from=build /app/server /server

ENTRYPOINT [ "/server" ]Update CUDA_VERSION to the latest available version so that we base our build on the most up-to-date CUDA library version (12.4.1 as of this writing).

Next, change RUN make to RUN make server so that we only build the server make target instead of building the full project.

Now let’s build our llama.cpp container:

$ podman build -t llama.cpp-server:cuda-12.4.1 -f .devops/server-cuda.Dockerfile .If everything went well we should have our llama.cpp image built correctly:

$ podman image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

localhost/llama.cpp-server cuda-12.4.1 48b3591da01b 2 minutes ago 2.45 GBAs you can see, the resulting image is pretty large. That’s because llama.cpp’s Dockerfile uses an official CUDA base image containing many NVIDIA utility libraries which are mostly not needed (except for cuBLAS). One way to trim this down would be to build a custom, lighter CUDA container image but that’s for another day.

Running AI model locally

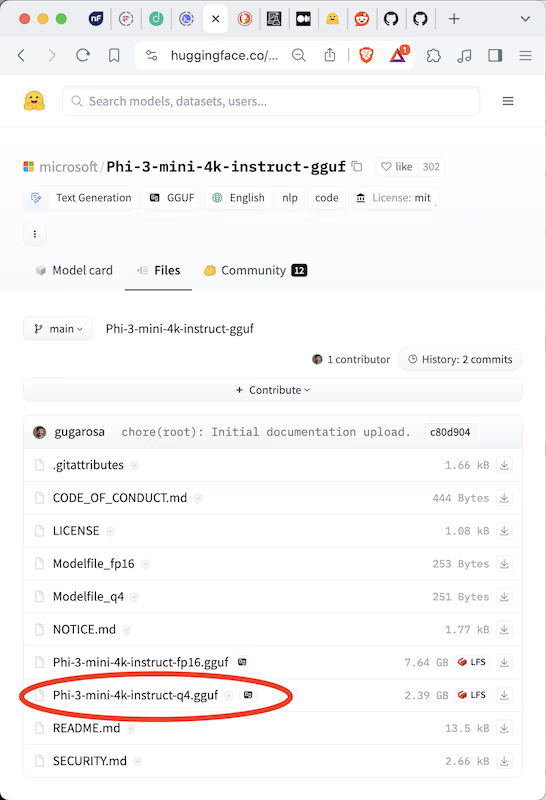

Let’s now download a language model so we can run local inference on our containerized llama.cpp server. We’ll use Microsoft’s Phi 3 language model, which is available in GGUF format in its quantized form, making it suitable to run locally on modest RAM resources. You can find it in Microsoft’s HuggingFace repo here. Go to the Files and versions section and download the file named Phi-3-mini-4k-instruct-q4.gguf:

Once downloaded, we are ready to run our llama.cpp server and mount Phi 3 locally (make sure to replace <path to local model directory> with the path to the directory where you downloaded the model):

$ podman run --name llama-server \

--gpus all \

-p 8080:8080 \

-v <path to local model directory>:/models \

llama.cpp-server:cuda-12.4.1 \

-m /models/Phi-3-mini-4k-instruct-q4.gguf \

--ctx-size 4096 \

--n-gpu-layers 99After running the server you should be able to notice the following interesting bit of output:

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 3060 Laptop GPU, compute capability 8.6, VMM: yes

llm_load_tensors: ggml ctx size = 0.30 MiB

llm_load_tensors: offloading 32 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 33/33 layers to GPU

llm_load_tensors: CPU buffer size = 52.84 MiB

llm_load_tensors: CUDA0 buffer size = 2157.94 MiBLet’s point out a couple of important bits here:

- The

--n-gpu-layersflag tells llama.cpp to fit as many as 99 layers into your GPU’s video RAM. The more model layers you fit in VRAM the faster inference will run. - The

--ctx-sizeflag tells llama.cpp the prompt context size for our model (i.e. how large our prompt can be). - Seeing

ggml_cuda_init: found 1 CUDA devicesmeans llama.cpp was able to access your CUDA-enabled GPU, which is a good sign. llm_load_tensors: offloaded 33/33 layers to GPUtells us that out of all 33 layers the Phi 3 model contains, 33 were offloaded to the GPU (i.e. all of them in our case, but yours might look different).

Let’s get-a-prompting!

It’s time to test out our llama.cpp server. The server API exposes several endpoints, we’ll use the /completion endpoint to prompt the model to give us a summary of a children’s book:

$ curl --request POST \

--url http://localhost:8080/completion \

--header "Content-Type: application/json" \

--data '{"stream": false, "prompt": "<|system|>\\nYou are a helpful AI assistant.<|end|>\\n<|user|>\\nSummarize the book the little prince<|end|>\\n<|assistant|>"}'The server should return something like this (redacted for brevity):

{

"content": " \"The Little Prince,\" written by Antoine de

Saint-Exupéry, is a philosophical tale that follows the journey

of a young prince from a small, asteroid-like planet. The story

is narrated by a pilot who crashes in the Sahara Desert after

fleeing a damaged plane.\n\nThe Little Prince, the prince

himself, shares stories of his adventures and encounters with

various inhabitants of different planets as he visits them.

During his travels, he meets a range of unique characters,

including a king, a vain businessman, a drunkard, a lamplighter,

and a snake, each representing different human traits and

behaviors.\n\nThe most significant relationships the Little

Prince forms are with a rose on his home planet and a fox on

Earth. His rose symbolizes love, innocence, and beauty, while the

fox represents friendship, companionship, and the complexity of

human relationships.\n\nThe narrative explores themes such as the

nature of relationships, the importance of keeping one's

promises, the essence of responsibility, and the meaning of love

and friendship. It emphasizes the value of imagination, the

pursuit of knowledge, and the power of human connection.

\n\nUltimately, the book is a poignant, philosophical allegory

about the significance of relationships, the importance of

holding onto childhood innocence, and the wonder and mystery of

the universe.\n\n\"The Little Prince\" remains a cherished

classic, captivating readers of all ages with its enchanting

stories, timeless wisdom, and evocative imagery.<|end|>",

...

"tokens_predicted": 340,

"tokens_evaluated": 25,

"generation_settings": {...},

"prompt": "<|system|>\nYou are a helpful AI assistant.<|end|>\n<|user|>\nSummarize the book the little prince\n<|assistant|>",

...

}

}The content field contains the generated response from our model (parsed for readability):

“The Little Prince,” written by Antoine de Saint-Exupéry, is a philosophical tale that follows the journey of a young prince from a small, asteroid-like planet. The story is narrated by a pilot who crashes in the Sahara Desert after fleeing a damaged plane.

The Little Prince, the prince himself, shares stories of his adventures and encounters with various inhabitants of different planets as he visits them. During his travels, he meets a range of unique characters, including a king, a vain businessman, a drunkard, a lamplighter, and a snake, each representing different human traits and behaviors.

The most significant relationships the Little Prince forms are with a rose on his home planet and a fox on Earth. His rose symbolizes love, innocence, and beauty, while the fox represents friendship, companionship, and the complexity of human relationships.

The narrative explores themes such as the nature of relationships, the importance of keeping one’s promises, the essence of responsibility, and the meaning of love and friendship. It emphasizes the value of imagination, the pursuit of knowledge, and the power of human connection.

Ultimately, the book is a poignant, philosophical allegory about the significance of relationships, the importance of holding onto childhood innocence, and the wonder and mystery of the universe.

“The Little Prince” remains a cherished classic, captivating readers of all ages with its enchanting stories, timeless wisdom, and evocative imagery.

Time to pat yourself in the back for becoming a true AI whisperer. Happy prompting!